Answer: Does OpenAI use machine learning? More answers – What kind of learning is used at OpenAI?

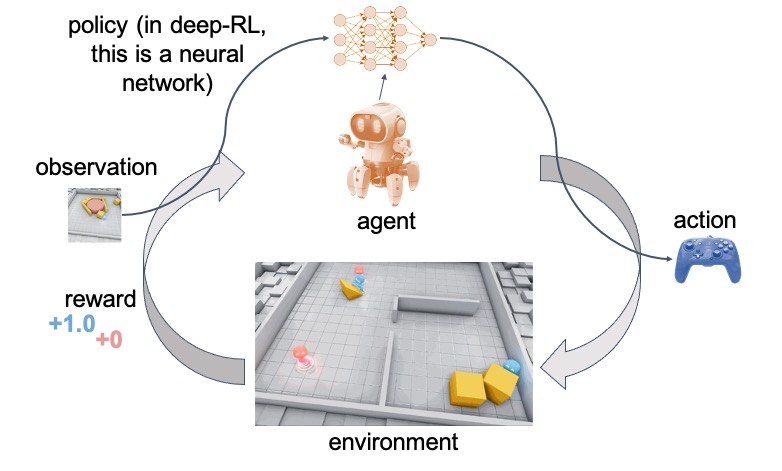

We solved the challenge with deep reinforcement learning (RL), crazy amounts of domain randomization, and no real-world training data. More importantly, we conquered the challenge as a team. From simulation and RL training to vision perception and hardware firmware, we collaborated so closely and cohesively.

Deep Learning: OpenAI utilizes deep learning models, such as GPT (Generative Pre-trained Transformer) models, known for their ability to understand and generate human-like text.

OpenAI Five

The system uses a form of reinforcement learning, as the bots learn over time by playing against themselves hundreds of times a day for months, and are rewarded for actions such as killing an enemy and taking map objectives.
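As a rough illustration of the reward signal described above, the sketch below scores the events of each time step with a weighted sum and then folds the per-step rewards into a discounted return. The event names, weights, and discount factor are hypothetical placeholders chosen for the example, not OpenAI Five's actual values.

```python
from typing import Dict, List

# Hypothetical reward weights; the real OpenAI Five values are not given here.
REWARD_WEIGHTS: Dict[str, float] = {
    "enemy_kill": 1.0,
    "map_objective": 2.0,
    "death": -1.0,
}


def step_reward(events: List[str]) -> float:
    """Sum the weights of all events observed in one time step."""
    return sum((REWARD_WEIGHTS.get(event, 0.0) for event in events), 0.0)


def discounted_return(rewards: List[float], gamma: float = 0.5) -> float:
    """Compute r_0 + gamma * r_1 + gamma^2 * r_2 + ... for one episode."""
    total = 0.0
    for reward in reversed(rewards):
        total = reward + gamma * total
    return total


# One short, made-up episode: three time steps with their observed events.
episode = [["enemy_kill"], [], ["map_objective", "death"]]
rewards = [step_reward(events) for events in episode]
print(rewards)                     # [1.0, 0.0, 1.0]
print(discounted_return(rewards))  # 1.25
```

A learning agent would adjust its policy to make this return larger over many self-play games; the update rule itself is outside the scope of this sketch.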

Is OpenAI built on TensorFlow : OpenAI and TensorFlow are two different entities in the field of artificial intelligence and machine learning. OpenAI is a research organization focused on creating safe and beneficial AI, while TensorFlow is an open-source library for building and training machine learning models.

Does OpenAI use PyTorch or TensorFlow

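OpenAI uses PyTorch, which was developed at FAIR (Facebook AI Research). PyTorch 2.0 uses the Triton compiler, which was developed at OpenAI, as a back end. OpenAI also uses transformers, which originated at Google, and RLHF, whose early work was published jointly by researchers at OpenAI and DeepMind.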

Is OpenAI a neural network : Architecture. Each OpenAI Five bot is a neural network containing a single layer with a 4096-unit LSTM that observes the current game state extracted from the Dota developer's API.
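To give a sense of scale, the following sketch counts the trainable parameters in a single LSTM layer with 4096 units. The input size is a hypothetical value, since the size of the game-state observation is not stated here, and the formula assumes one bias vector per gate.

```python
def lstm_parameter_count(input_size: int, hidden_size: int) -> int:
    """Count weights and biases in one LSTM layer.

    An LSTM has four gate blocks (input, forget, cell, output). Each has an
    input weight matrix, a recurrent weight matrix, and one bias vector.
    Some libraries, PyTorch among them, store two bias vectors per gate,
    which adds 4 * hidden_size more parameters.
    """
    per_gate = hidden_size * input_size + hidden_size * hidden_size + hidden_size
    return 4 * per_gate


HIDDEN_SIZE = 4096  # from the text above
INPUT_SIZE = 1024   # hypothetical; the observation size is not given here

print(lstm_parameter_count(INPUT_SIZE, HIDDEN_SIZE))  # 83902464
```

Under these assumptions the layer holds roughly 84 million parameters, most of them in the recurrent weight matrices.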

OpenAI is an AI research and deployment company dedicated to ensuring that general-purpose artificial intelligence benefits all of humanity. We push the boundaries of the capabilities of AI systems and seek to safely deploy them to the world through our products.

Python

OpenAI's libraries are mainly designed to be used with Python, so the behavior of a model accessed from other languages cannot be guaranteed. Note that TensorFlow is not an OpenAI library; it is an open-source framework from Google.

What data is OpenAI trained on

Text datasets: OpenAI used pre-existing text datasets, such as books, news articles, and scientific papers, to train its language models.

Does OpenAI use PyTorch or TensorFlow

OpenAI standardized on PyTorch as its deep learning framework in 2020.

OpenAI provides a custom Python library which makes working with the OpenAI API in Python simple and efficient.
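A minimal sketch of what a call through that library might look like is shown below. It assumes the `openai` package (version 1 or later) is installed and that an `OPENAI_API_KEY` environment variable is set; the helper names `build_messages` and `ask` and the default model name are illustrative placeholders, not part of the library.

```python
from typing import Dict, List


def build_messages(prompt: str, system: str = "You are a helpful assistant.") -> List[Dict[str, str]]:
    """Build the chat message list in the role/content format the API expects."""
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": prompt},
    ]


def ask(prompt: str, model: str = "gpt-4o-mini") -> str:
    """Send one prompt and return the reply text.

    Assumes `pip install openai` (v1 or later) and an OPENAI_API_KEY
    environment variable; the default model name is only a placeholder.
    """
    from openai import OpenAI  # imported lazily so build_messages works without it

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    response = client.chat.completions.create(
        model=model,
        messages=build_messages(prompt),
    )
    return response.choices[0].message.content or ""


if __name__ == "__main__":
    # Only builds the request payload; call ask(...) to contact the API.
    print(build_messages("Does OpenAI use machine learning?"))
```

Keeping the message construction separate from the network call makes the payload easy to inspect and test without an API key.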

Does GPT use neural networks : More specifically, the GPT models are neural network-based language prediction models built on the Transformer architecture. They analyze natural language queries, known as prompts, and predict the best possible response based on their understanding of language.
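The idea of predicting what comes next from preceding text can be illustrated with a toy bigram counter. This is far simpler than a Transformer and is not how GPT works internally; it only shows the prediction task in miniature. The function names and the sample corpus are made up for the example.

```python
from collections import Counter, defaultdict
from typing import Dict, Optional


def train_bigrams(text: str) -> Dict[str, Counter]:
    """Count, for every word, which words follow it in the training text."""
    follows: Dict[str, Counter] = defaultdict(Counter)
    words = text.lower().split()
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(model: Dict[str, Counter], word: str) -> Optional[str]:
    """Return the most frequent follower of `word`, or None if unseen."""
    counts = model.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


corpus = "the model reads the prompt and the model predicts the next word"
model = train_bigrams(corpus)
print(predict_next(model, "the"))    # model
print(predict_next(model, "zebra"))  # None
```

A GPT model replaces the lookup table with a neural network that conditions on the whole prompt rather than a single preceding word.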

Is OpenAI really an AI : OpenAI is an AI research and deployment company. Our mission is to ensure that artificial general intelligence benefits all of humanity.

Where does OpenAI get its datasets

OpenAI obtains its datasets from a variety of sources, such as public data repositories, social media, and private data collections. It also uses crowdsourcing to collect data from a large number of people. Finally, OpenAI creates some of its own datasets using machine learning techniques.

PyTorch

While TensorFlow is used in Google Search and by Uber, PyTorch powers OpenAI's ChatGPT and Tesla's Autopilot. Choosing between these two frameworks is a common challenge for developers.

Is GPT AI or machine learning : Though it's accurate to describe the GPT models as artificial intelligence (AI), this is a broad description. More specifically, the GPT models are neural network-based language prediction models built on the Transformer architecture.